OK, here we go! Problem of the Week # 2 is live!

For background on this initiative, check out this post and this weeks launch video that Brian and I did on YouTube.

Background on This Week’s Problem

Power Query is a data transformation and data preparation engine. Using Power Query, you can perform the extract, transform, and load (ETL) processing of data.

Frequently, when building dimension tables you can take advantage of existing data on the internet. However, while often you can find exactly the data you need, it may not be optimally structured for usage in Power BI. This week’s problem is taken from a real-world case just last week, when for a mapping report the best data source was available in a text file with a somewhat unusual structure.

Power Query is built for reshaping this type of data into a structure well-suited for a star schema data model within Power BI.

Your Task

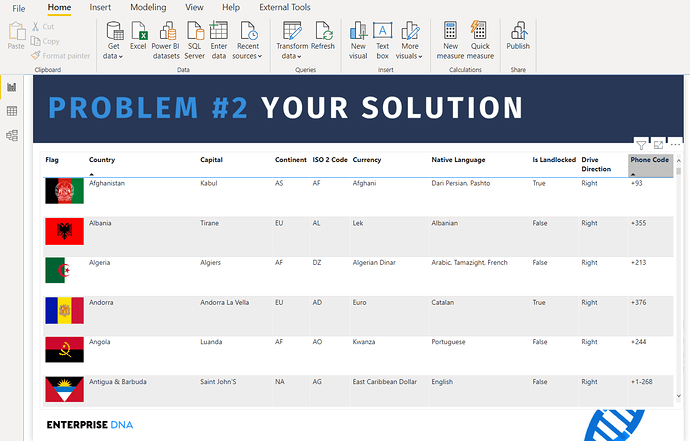

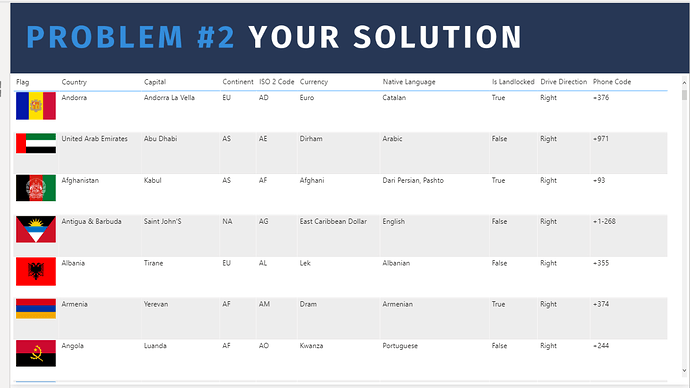

This week’s Problem of the Week challenges you to reshape data in the provided txt file into a clean and proper Dimension table that’s suitable for analysis.

Key areas to cover:

- Turning data into a proper tabular format

- Trimming, cleaning and properly formatting values

As always, this initiative is all about expanding your knowledge and skills by working through practical examples. The primary focus being the process, this is also why we encourage you to do a write up.

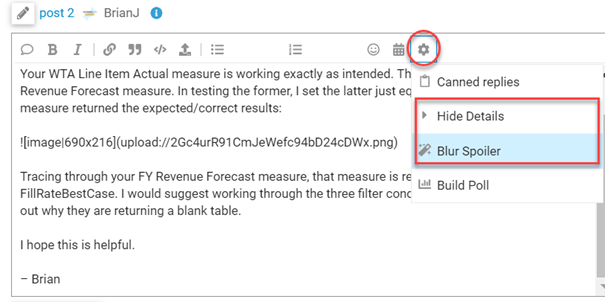

Important we ask that if you post anything directly related to a solution to please use the “Hide Details” or “Blur Spoiler” options within your post. This will prevent people who have not yet completed their solution from seeing things they might not want to see yet and could influence their solution.

To use these options, just select the text or image you want to hide, click on the gear icon on the top right at the top of your post and select either Hide Details or Blur Spoiler.

Want to stretch yourself further?

You can increase the difficulty level by examining and/or even modifying the M code in the Advanced Editor.

Note here’s how to extend your External Tools menu with a link to the M Reference Guide.

Note that this is a Power Query-only challenge, so no other tools or techniques are allowed even if that’s what you might choose to use outside of this challenge.

Eligibility for the Drawing

To encourage participation, we will be doing a drawing of five random participants each round to receive one of these cool Enterprise DNA vinyl laptop stickers:

To be eligible for the drawing, just send an email to problemoftheweek@enterprisedna.co before 11:59pm ET, Tuesday, December 22 , 2020 indicating you’ve successfully completed the challenge, and providing your mailing address for us to send the sticker if you’re selected.

We hope you find this initiative fun, challenging and helpful in continuing to build your Power BI skills. All of the information you will need is contained in files within this post. If you have any questions or comments, just message @BrianJ or @Melissa in this forum thread.

Good luck, and enjoy!!!

Melissa

P.S. Your feedback is always welcomed, and if you have a real-world problem that you think might make a good future Problem of the Week, please email that to us at problemoftheweek@enterprisedna.co.

Thanks!

.

To get started:

Download the files below, in the Power Query Editor select “Manage Parameter”

Add the full file path and name to the list and finally set it as “Current Value”.

And have fun…

data.txt (133.7 KB)

eDNA Problem of the Week 2 – Dimension Table from Text PQ Dec 2020.pbix (2.8 MB)