Okay, you are on a very good track here – I have built some very powerful financial reporting applications using a master transaction table very similar in structure to the fact table you are using here, so solid foundation to start with. With regard to your question about the three methods, you can definitely discard method #1, which fails to take advantage of the powerful data model you are building. However, methods #2 and #3 are not incompatible, and in fact I would look at these as two sides of the same coin. In some situations you’ll want to use a slicer and take advantage of the dynamic visualization aspects that you’ve noted. In other situations, you are not going to want to have to rely on a slicer, and will set the filter conditions in the measure the way you’ve done in method #2.

However, there are still two big issues to resolve here:

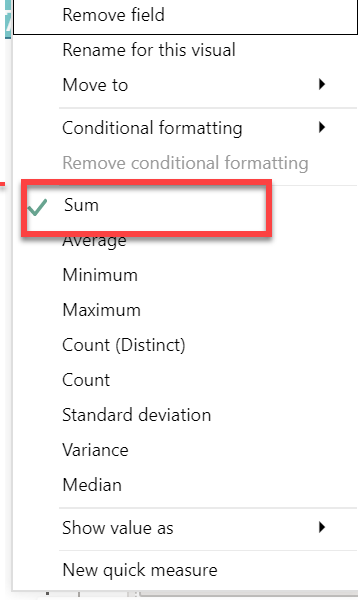

- the easy one is that you want to steer completely clear of using implicit measures like this one that you use for method #3

There are a number of features in Power BI that Microsoft has included ostensibly to make the program easier for beginners but that lead to bad practices. This is one of those cases. Instead of using the sum function in the visual, create an explicit measure – Total Value = SUM(P7RevPerMkt[Value] ). If you want to read about why explicit measure should be preferred over implicit measures, I would refer you to the thread below which references an excellent video by @sam.mckay on this topic.

Also note “Value” is not a great name to use for a column, since some advanced DAX functions return that as a column name default. Instead use something like “Amount”.

- the much bigger issue here is that you have a granularity mismatch between your calendar table and your fact table. The former is daily granularity, whereas the latter is weekly. You can connect the two tables with those date fields and it won’t return an error, but if you perform certain time intelligence functions you will get incorrect results. There are two primary ways to fix this – the first is to delete the physical relationship between the date table in the fact table and create a virtual relationship via TREATAS while allocating your fact table amounts down to a daily granularity level using DAX. Sam has many videos on this topic, since it is a very common problem in budgeting. Here’s an excellent one that walks through the allocation method to fix the granularity mismatch:

The second option is to allocate your fact table amounts daily via Power Query and then create a physical relationship between the calendar table and the fact table, which will now work fine for time intelligence because both are operating at a daily granularity.

You are in luck here, since Enterprise DNA Expert @haroonali1000 just ran a Problem of the Week exercise dealing with this exact same issue. I would refer you to this thread, where he explains the problem and then later provides a detailed solution video breakdown.

That’s probably a lot to throw at you at once, so take some time to study these issues, and give a shout back if you have any follow-up questions. As I said, I think you are on a very good track here and with some additional effort will end up with a very powerful data model.

I hope this is helpful.

– Brian