From @haroonali1000:

OK, here we go! Problem of the Week #4 is now live!

For background on this initiative, check out this post and this week’s launch video that Brian and I did on YouTube.

Problem of the week #4

First Challenges and now problem of the week!! Don’t worry it’s just a quick guest appearance.

This week’s problem is something I was tackling back in November. While I was chatting it through with the Oracle (@BrianJ), he said it would make for a great Problem of the Week, so here it is…

Granularity is a common issue we need to be able to handle, and in industry it’s common practice to receive a jumble of daily, monthly and yearly figures (for example in finance and billing cycles).

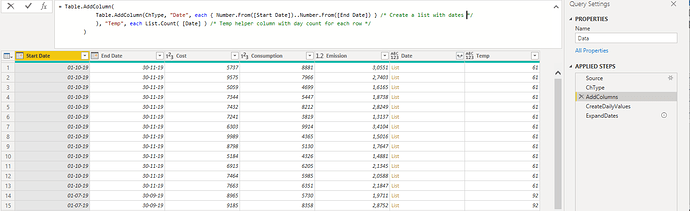

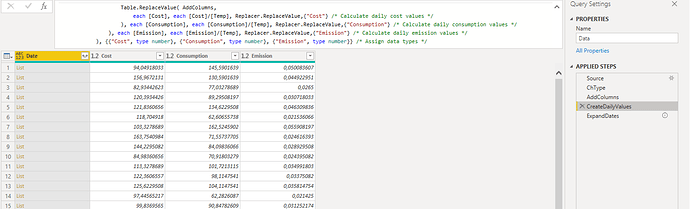

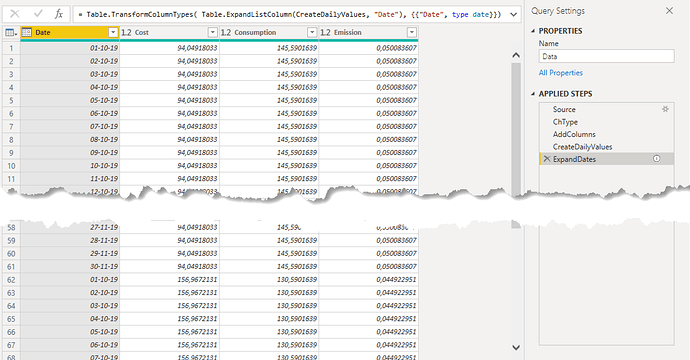

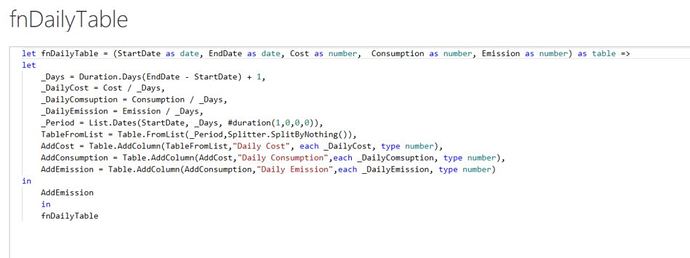

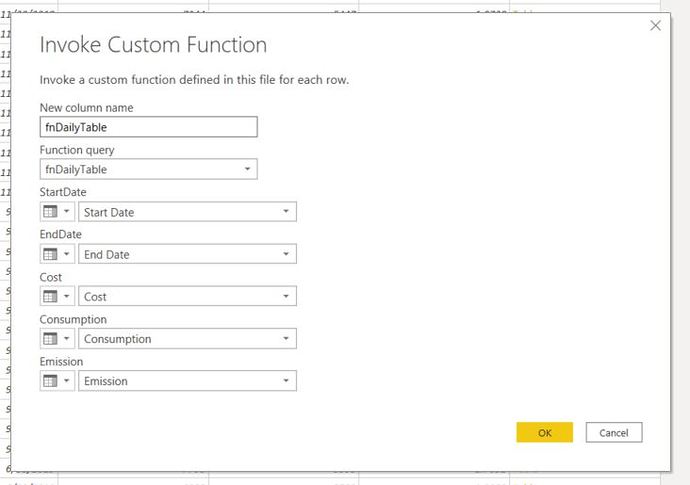

We all know that, as a general guideline, any transformation that can be done in Power Query should be done in Power Query. There are a number of methods available in DAX, but this week’s calling is to complete the challenge using only Power Query.

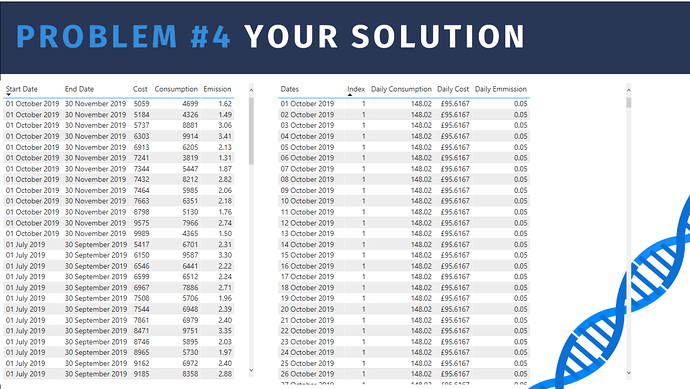

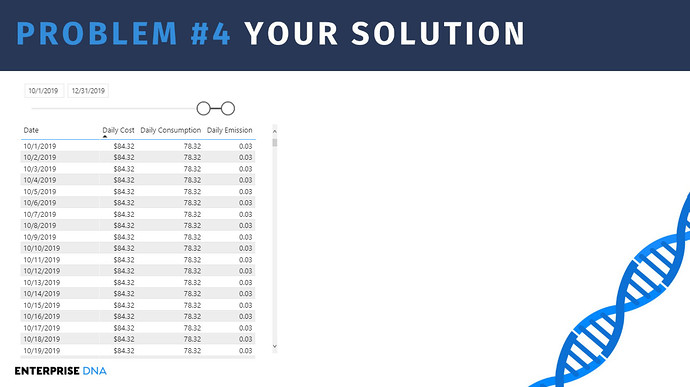

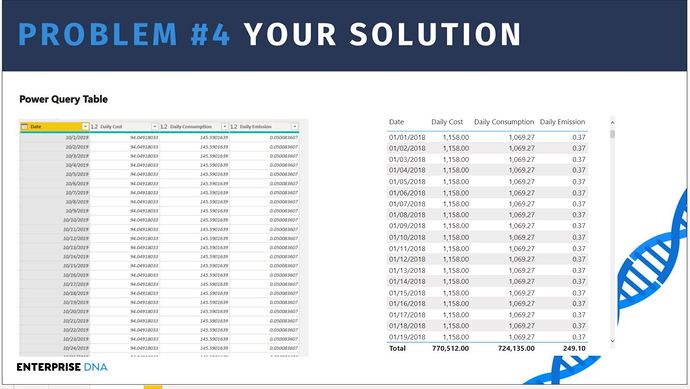

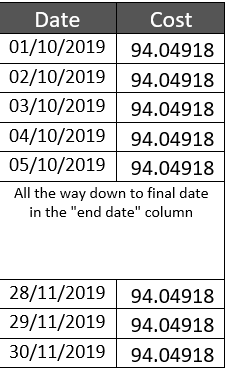

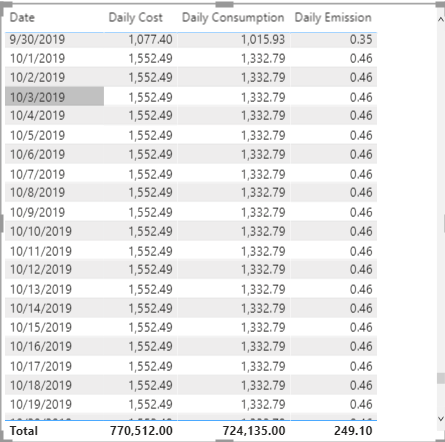

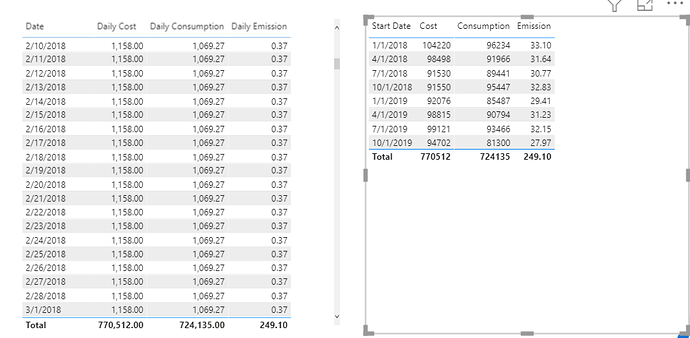

The ask is to take the monthly aggregated data and create a fact table at daily granularity. I have shown below, in a logical manner, the result we want to achieve.

So taking the row below as an example:

![]()

The end result for this row would be as follows.

Final table should consist of the following columns: Date, Cost, Consumption, Emission
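To make the target shape concrete, here is a rough sketch in Python (deliberately not Power Query, so it does not give away a solution). It assumes each source row carries a start date, an end date and three totals, and that each total is spread evenly across the days in the period. Both the even split and the input field names (`start`, `end`, `cost`, `consumption`, `emission`) are assumptions for illustration only, and the sample row is made up rather than taken from the challenge file.

```python
from datetime import date, timedelta


def expand_to_daily(row):
    """Spread one aggregated row evenly over every day in its period."""
    start, end = row["start"], row["end"]   # hypothetical input field names
    n_days = (end - start).days + 1         # period is inclusive of both ends
    return [
        {
            "Date": start + timedelta(days=i),
            "Cost": row["cost"] / n_days,                # assumed even split
            "Consumption": row["consumption"] / n_days,
            "Emission": row["emission"] / n_days,
        }
        for i in range(n_days)
    ]


# Made-up sample row, not taken from the challenge data.
sample = {
    "start": date(2021, 1, 1),
    "end": date(2021, 1, 31),
    "cost": 310.0,
    "consumption": 62.0,
    "emission": 31.0,
}
daily = expand_to_daily(sample)
```

Under these assumptions, one monthly row becomes one row per day with the columns Date, Cost, Consumption and Emission, and the daily values add back up to the monthly totals.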

That’s all you have to do.

Over to you and good luck, looking forward to reviewing your solutions.

Any questions do not hesitate to shout.

Important

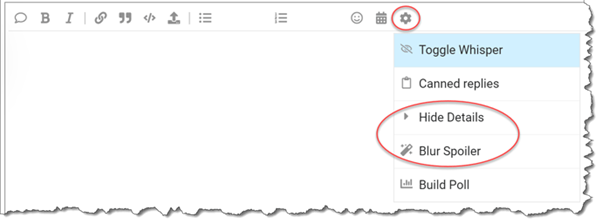

If you post anything directly related to a solution, please use the “Hide Details” or “Blur Spoiler” options within your post. This prevents people who have not yet completed their solution from seeing things that could influence their own approach.

To use these options, just select the text or image you want to hide, click the gear icon at the top right of your post and select either Hide Details or Blur Spoiler.

Note that this is a Power Query only challenge, so no DAX even if that is what you would choose to use outside of this challenge.

Eligibility for the Drawing

To encourage participation, we will be doing a drawing of five random participants each round to receive one of these cool Enterprise DNA vinyl laptop stickers:

To be eligible for the drawing, just send an email to problemoftheweek@enterprisedna.co before 11:59pm ET, Tuesday, January 26th, 2021 indicating you’ve successfully completed the challenge, and please provide your mailing address for us to send the sticker if you’re selected.

We hope you find this initiative fun, challenging and helpful in continuing to build your Power BI skills. All of the information you will need is contained in the files within this post. If you have any questions or comments, just message @BrianJ or @haroonali1000 in this forum thread.

Best of luck!!!

Haroon

P.S. Your feedback is always welcome, and if you have a real-world problem that you think might make a good future Problem of the Week, please email it to us at problemoftheweek@enterprisedna.co.

Thanks!

POTW #4 Final.pbix (492.1 KB)

Common problem indeed…
