Greetings,

I am trying to create and streamline data models that I can use repeatedly. Here is the scenario. I have multiple data tables on SQL. Frequently, request comes to extract selected data from the Tables. I want to create a data model that includes the followings:

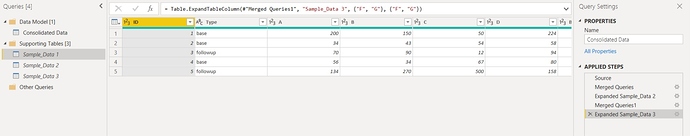

- Request Table: This table includes two key columns to join with Tables in SQL. The keys are ID and Types. Both are populated with data. The table also includes columns with no records (except for ID and Type). These columns with corresponding IDs and Types exist in one or more of the Tables in SQL. The goal is to populate records in Request Table by searching and matching columns and rows in all Tables in SQL. The key, if possible, is to search and match all columns in request table across SQL Tables at once. Attached are sample files:

Sample Request File: This file contains ID & Type data with columns A thru G with no records

SampleData1. This is one of the Table in SQL that contains same ID and Types as in Request file and also contains records from A thru C

SampleData2. This is another Table in SQL that contains same ID and Types as in Request file and contains records from D thru E

SampleData3. This is third Table in SQL that contains same ID and Types as in Request file and contains records on F & G

Desire Results Table: This table consolidate records from all three SQL Tables into one.

My questions are:

Is this scenario suitable to be created as data model in Power BI or create model in SQL?

If answer to above question is Power BI, then what’s the most efficient method to create a model that can be used repeatedly since Tables in SQL are rather statics and changes occasionally. I want to be able to plug in the request file when I receive them into data model and with few minor modifications, run the model and extract records.

Any guidance is greatly appreciated.

Thank you,

Helal

SampleData1.xlsx (8.7 KB) SampleRequestFile.xlsx (8.7 KB) Desired Results.xlsx (8.9 KB) SampleData3.xlsx (8.7 KB) SampleData2.xlsx (8.7 KB)