@JulieCarignan,

So much good stuff in this post! I feel like we are all already a project team

On the topic of data extraction: “shifting left” also applies to this. The closer to the data source you can get when extracting the required data/information, the better. Don’t be afraid to go back to the person/group that serves up the data and ask about including data parsing capabilities in the original data, such as pulling out keywords or adding boolean/bit columns if this or that condition is true or false. Sometimes a SQL view is all that is needed, or maybe using custom fields in the source system to capture required discrete data values.

Even within Power BI, if you can use a SQL query to extract the data from the data source, you could use simple syntax such as "is_deployed = CASE WHEN value LIKE ‘%delivered%’ THEN 1 WHEN value LIKE ‘%replaced%’ THEN 1 … ELSE 0 END.

There is very likely something similar in M that will do that. I found this example using Coalesce in M (which also exists in T-SQL although not exactly the same). This requires the same foreknowledge of the keywords you are looking for as the SQL example above, but it will be much easier to add new keywords to the list to search for over time.

I also found the SEARCH function on Microsoft’s site. This can be checked for a non-blank/0 value to indicate that a search keyword exists so that a boolean value can be set.

In one particular student information system report, I have used the ETL capabilities in Power BI to replace numerous abbreviations and misspellings of town and address values with “official” values. I have about 25 “Replaced Value” and related steps. I used this despite using SQL to extract the data because the Transform Data UI is easier to add changes to over time.

These methods are a bit like a hammer: simple, effective for what they do, but potentially a performance drag if the task is very large (i.e. tons of records, tons of text, and/or tons of keywords to find). That’s where the AI path that @Melissa mentioned would be the purpose-specific nail gun. I have not had the opportunity to work with that functionality, so I can’t comment on it, but it’s worth some research.

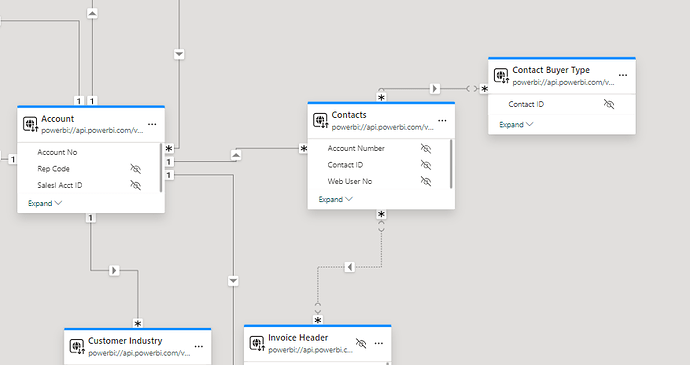

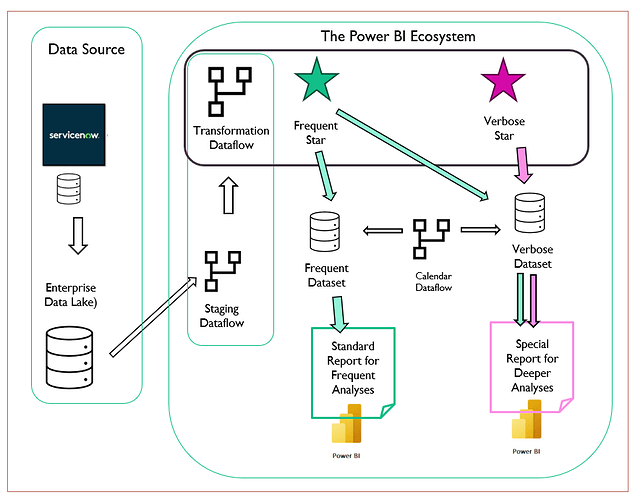

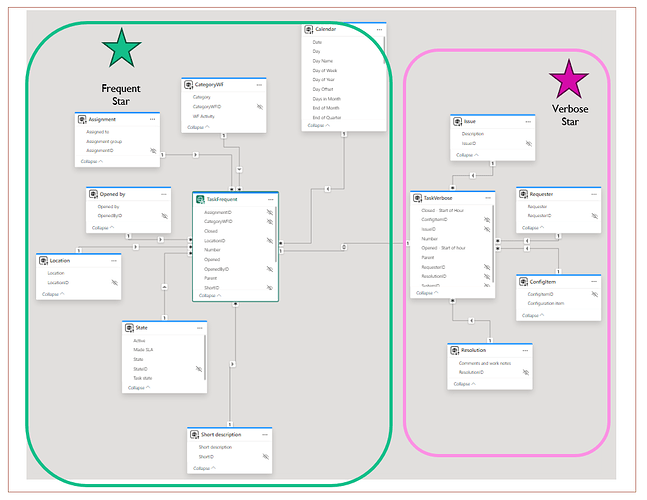

On the topic of ticketing systems and associated data models: I have developed many of them over my career, including 3 at my current employer, that tick all the boxes mentioned by you and @Heather. In particular, I use a core table with “standard” fields, although not all ticket types use all of them, plus extension tables for truly custom fields by ticket type. Then I have events underneath every ticket. My Power BI reports for them use a snowflake model with the tickets and events as the fact tables. I can provide a picture of my data model if desired.

I definitely recommend mimicking that structure in your model, which will allow you to construct all “standard” measures and associated visuals in a highly reusable manner on top of the core tables. Create additional custom fact tables and/or dimensions for specific ticket types or scenarios which can be linked to the core tables via ticket ID or similar value, and then you can develop measures, visuals, and pages within your reporting that focus on those specific ticket types/scenarios.

Or even a separate report for the scenario-specific needs as @Heather mentioned. I haven’t gotten that fancy yet  , but this would work well with the Power BI service’s “app”, which can bundle multiple reports together.

, but this would work well with the Power BI service’s “app”, which can bundle multiple reports together.

I hope this helps, and I wish you great success in your endeavor!

John