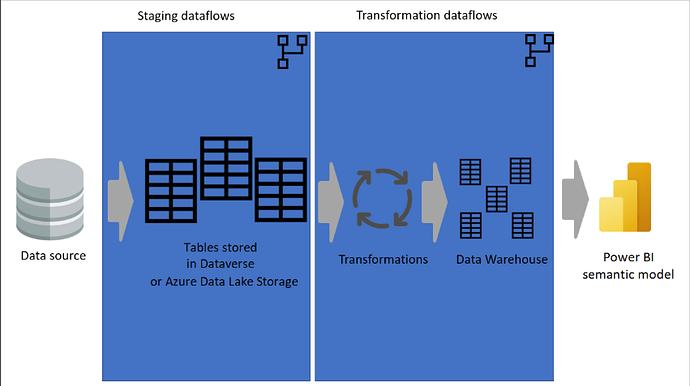

“Having some dataflows just for extracting data (that is, staging dataflows) and others just for transforming data is helpful not only for creating a multilayered architecture, it’s also helpful for reducing the complexity of dataflows.”

This is quoted from the Microsoft documentation, “Best practices for dataflows”.

OK, cool, that sounds like a great plan. There’s just one issue: it doesn’t work without a silly workaround.

As Reza Rad has documented here, if you try to modify a linked table in a downstream dataflow, you get an error:

“Can’t save dataflow

Linked tables can’t be modified. Please undo any changes made to the following linked tables and try again: {0}”

To illustrate with a basic example, I create a table of fruit sales in Dataflow1, with each transaction on a separate row.

I save Dataflow1 and close it.

I then create a new dataflow (Dataflow2) and use “Link tables from other dataflows” to load my table from Dataflow1.

In Dataflow2, I do a simple group by to total the fruit sales by category.

After completing this basic group by, I can’t save my dataflow, because a downstream dataflow isn’t allowed to transform a linked table, even though that is exactly the pattern the Microsoft best practices documentation recommends.

Maybe I should submit an idea on the Fabric forum asking for Microsoft’s own best practices to be implemented in Power BI. What an odd request.

Am I missing something here?