Below is my explanation…

Summary

Initially it took me a while to write the first two lines of the M code, because I wanted to clean my data in as few steps as possible (I didn't follow the steps that were in the sample file). For a deep dive into Csv.Document, read the blog by Chris Webb. I also added ExtraValues.Ignore so that unwanted extra values are dropped and we don't get an error.

= Csv.Document(File.Contents( FileLocation ),{"ColHeader", "Data"}, ":", ExtraValues.Ignore ,1252)
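To see what ExtraValues.Ignore does without touching the real file, here is a minimal sketch that runs the same call against a small in-memory sample. The sample text and the step names SampleText and SampleBinary are made up for illustration; only the Csv.Document arguments mirror the step above.

```powerquery
let
    // Hypothetical sample; the second line holds an extra ":"-separated value
    SampleText = Text.Combine({"Name: India,", "Note: a: b"}, "#(lf)"),
    // Encode as Windows-1252 to match the encoding argument used above
    SampleBinary = Text.ToBinary(SampleText, 1252),
    Source = Csv.Document(
        SampleBinary,
        {"ColHeader", "Data"},   // fixed two-column layout
        ":",                     // delimiter
        ExtraValues.Ignore,      // values beyond the second column are dropped
        1252
    )
in
    Source
```

The intent is that the trailing value on the second line is discarded instead of raising an error, which is the behaviour I wanted for the stray data in the file. The other options, ExtraValues.Error and ExtraValues.List, would fail on or collect those extra values.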

After loading the data into two columns (ColHeader and Data), I cleaned each column with its own set of characters. In ColHeader I trimmed commas (,) and spaces (" "), which helped in the next step because I had to filter some of the rows. In the Data column I trimmed all the possible characters, i.e. spaces, commas and square brackets.

= Table.TransformColumns(Source,{{"ColHeader", each Text.Trim(_ ,{","," "}), type text},{"Data", each Text.Trim( _ ,{" ",",","[","]"}), type text}})
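As a small illustration of the trimming, the sketch below applies the same two Text.Trim calls to single values. The raw strings are hypothetical and only show the kind of noise I was removing.

```powerquery
let
    // Hypothetical raw values with the kind of noise found in the file
    RawHeader = " Name, ",
    RawData = " [India], ",
    // Same character lists as in the Table.TransformColumns step
    CleanHeader = Text.Trim(RawHeader, {",", " "}),
    CleanData = Text.Trim(RawData, {" ", ",", "[", "]"})
in
    [Header = CleanHeader, Data = CleanData]
```

Text.Trim only strips the listed characters from the start and end of the text, so any commas or brackets in the middle of a value are left alone.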

The next few steps were about setting proper headers: I filtered the required rows, fixed the text in ColHeader, and added a custom column to replace values such as Alpha_2 …

From here I took the list of headers, which I use later to club each set of rows into a table. I didn't hard-code the number of headers, so that new headers are picked up in the future. I added an Index column and divided it by the count of headers using the M function Number.IntegerDivide. Next came grouping, and I kept applying the rest of my process on the nested tables themselves without expanding the data (this is the approach I generally follow, but I still need to check its performance).
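The index, integer-divide and group steps can be sketched roughly like this. It is not the exact query from my file: the sample table, the column name RecordId and the step names are assumptions for illustration, and it assumes every record lists the same headers in the same order.

```powerquery
let
    // Hypothetical sample: three headers repeating for two records
    Source = #table(
        type table [Header = text, Data = text],
        {
            {"Name", "India"}, {"Alpha_2", "IN"}, {"Flag", "in.png"},
            {"Name", "Japan"}, {"Alpha_2", "JP"}, {"Flag", "jp.png"}
        }
    ),
    // Distinct headers, so the count adapts if new headers appear
    Headers = List.Distinct(Source[Header]),
    HeaderCount = List.Count(Headers),
    // Zero-based index so integer division gives one id per block of rows
    AddedIndex = Table.AddIndexColumn(Source, "Index", 0, 1),
    AddedRecordId = Table.AddColumn(
        AddedIndex,
        "RecordId",
        each Number.IntegerDivide([Index], HeaderCount),
        Int64.Type
    ),
    // Keep each block of rows as a nested table instead of expanding it
    Grouped = Table.Group(
        AddedRecordId,
        {"RecordId"},
        {{"Rows", each _, type table}}
    )
in
    Grouped
```

Because the index starts at 0, the first HeaderCount rows share one RecordId, the next HeaderCount rows share the next, and so on, which is what lets Table.Group collect one nested table per record.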

After all the other transformations I loaded the data, keeping track of how much data was being loaded. It showed that around 3 MB was loaded, which surprised me because the actual data was only in the KB range, so I went back to optimize it. The first thing was to buffer the header list, since referencing an unbuffered step is the most common cause of data being loaded repeatedly. So I added the List.Buffer function:

= List.Buffer(List.Distinct(#"Added Custom"[Header]))

I also added a buffer in the next step, because I was referring back to the previous table:

= Table.Buffer(#"Added Custom")

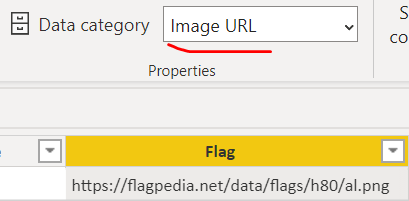

Then I loaded the data again and it ran faster. After that I changed the data category for Flag to Image URL to show the country flag.

Finally, I added the matrix as per the challenge requirement.