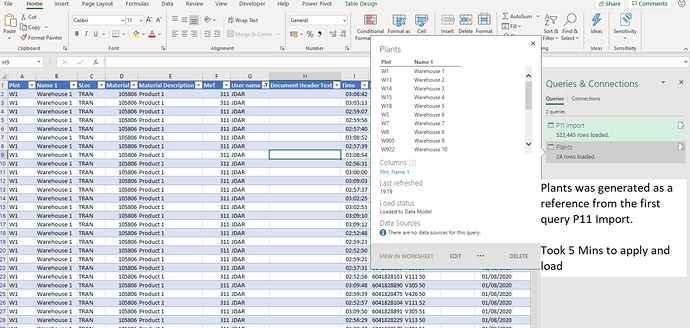

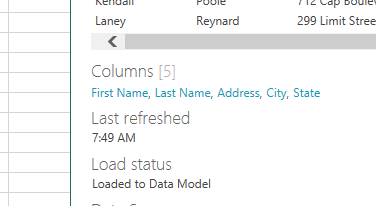

Okay Darren, I copied your table into my own Excel file, and duplicated your query steps exactly - and my time to have both tables load to the data model was under 2 minutes - I then tried loading both tables back to the Excel file, and it took about 2 1/2 minutes.

So, I’m guessing there may be an issue with processing power, or even your version of Excel?

That being said, I did go back and look at your query steps, and I think we can speed a few of them up.

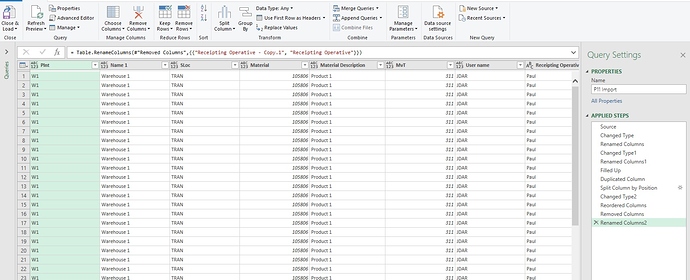

This is your code for the P11 table:

let

Source = Excel.CurrentWorkbook(){[Name=“Table1”]}[Content],

#“Changed Type” = Table.TransformColumnTypes(Source,{{“Entry Date”, type date}}),

#“Renamed Columns” = Table.RenameColumns(#“Changed Type”,{{“Qty in UnE”, “Units”}}),

#“Changed Type1” = Table.TransformColumnTypes(#“Renamed Columns”,{{“Pstng Date”, type date}, {“Time”, type time}}),

#“Renamed Columns1” = Table.RenameColumns(#“Changed Type1”,{{“Reference”, “Del Ref”}, {“PO”, “PO No”}, {“Document Header Text”, “Receipting Operative”}}),

#“Filled Up” = Table.FillUp(#“Renamed Columns1”,{“Receipting Operative”, “PO No”}),

#“Duplicated Column” = Table.DuplicateColumn(#“Filled Up”, “Receipting Operative”, “Receipting Operative - Copy”),

#“Split Column by Position” = Table.SplitColumn(#“Duplicated Column”, “Receipting Operative - Copy”, Splitter.SplitTextByPositions({0, 5}, false), {“Receipting Operative - Copy.1”, “Receipting Operative - Copy.2”}),

#“Changed Type2” = Table.TransformColumnTypes(#“Split Column by Position”,{{“Receipting Operative - Copy.1”, type text}, {“Receipting Operative - Copy.2”, type text}}),

#“Reordered Columns” = Table.ReorderColumns(#“Changed Type2”,{“Plnt”, “Name 1”, “SLoc”, “Material”, “Material Description”, “MvT”, “User name”, “Receipting Operative - Copy.1”, “Receipting Operative”, “Time”, “Mat. Doc.”, “Del Ref”, “PO No”, “Pstng Date”, “Units”, “Entry Date”, “Receipting Operative - Copy.2”}),

#“Removed Columns” = Table.RemoveColumns(#“Reordered Columns”,{“Receipting Operative”, “Receipting Operative - Copy.2”}),

#“Renamed Columns2” = Table.RenameColumns(#“Removed Columns”,{{“Receipting Operative - Copy.1”, “Receipting Operative”}})

in

#“Renamed Columns2”

compare that to my code for the same table:

let

Source = Excel.CurrentWorkbook(){[Name=“Table1”]}[Content],

#“Changed Type” = Table.TransformColumnTypes(Source,{{“Plnt”, type text}, {“Name 1”, type text}, {“SLoc”, type text}, {“Material”, Int64.Type}, {“Material Description”, type text}, {“MvT”, Int64.Type}, {“User name”, type text}, {“Document Header Text”, type text}, {“Time”, type time}, {“Mat. Doc.”, Int64.Type}, {“Reference”, type text}, {“PO”, Int64.Type}, {“Pstng Date”, type date}, {“Qty in UnE”, Int64.Type}, {“Entry Date”, type date}}),

#“Renamed Columns” = Table.RenameColumns(#“Changed Type”,{{“Qty in UnE”, “Units”}, {“Reference”, “Del Ref”}, {“PO”, “PO No”}, {“Document Header Text”, “Receipting Operative”}}),

#“Filled Up” = Table.FillUp(#“Renamed Columns”,{“Receipting Operative”, “PO No”}),

#“Extracted Text Before Delimiter” = Table.TransformColumns(#“Filled Up”, {{“Receipting Operative”, each Text.BeforeDelimiter(_, " "), type text}})

in

#“Extracted Text Before Delimiter”

Basically:

- I put all Change Type steps in one

- renamed columns only once

- it looked like your copy step on the Receipting Operative was only to split the column and retain the first name, so I removed that step and instead just did an extraction

- this meant there was no need to rearrange the columns (NOTE - column rearrange is a step that hogs resources, so avoid it if not needed to return the data in a table format in Excel)