I’m hoping to get some advice about speeding up my query.

Data source info:

• Json format

• .log files (text format)

• PBI Gateway mashup log files (you can get when additional logging in enabled)

The files themselves are not big, for my sample data there are 9 files, at around 1.5 – 2.5 mb each but the load time is quite long, around 20 minutes.

This happens when the query runs, the Table.ExpandRecordColumn line of the code. The progress monitor at the bottom of PQ shows loading tens or hundreds of MB per file.

Example below is if I have 1 file in the directory size, 1,191 KB, loading shows > 68 MB.

![]()

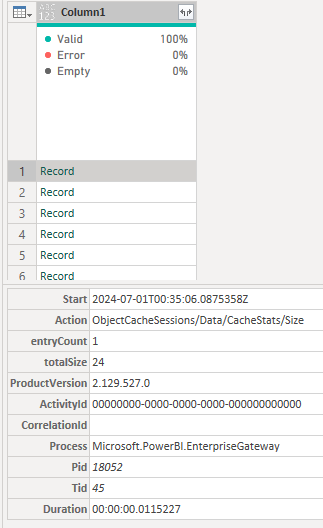

When the JSON parsed, it creates a Record for each line of JSON.

I’m assuming that, as the record is expanded, it’s held in memory before being expanded to the table.

However, I’m confused as the final PBI file is not large, less than 1MB. For this 1 sample file, the pbix file is only 119 KB.

There is quite a lot of data, just that one file creates 70 columns with 1,568 rows. The amount of data being loaded can’t be reduced.

Does anyone know why the query is using so much memory when loading even though the source file is not big and the final pbix file is also not big?

Is there any way we can speed it up?

PBIX file & sample data file attached, the data in the sample file doesn’t take long to load, but as soon as the files become larger and more numerous, the load takes much longer:

Tim GW Logs analyser post.pbix (208.8 KB)

The M code:

// FileLocation

"C:\Users\me\Documents\Data Local\My Logs" meta [IsParameterQuery=true, Type="Text", IsParameterQueryRequired=true]

// FuncProcessMashupLogs

let

// Load files from the specified folder

Source = Folder.Files(FileLocation),

// Buffer the source table to improve performance in subsequent steps

BufferSource = Table.Buffer(Source),

// Filter to include only Mashup log files and exclude irrelevant files

FilterMashupLogsOnly = Table.SelectRows(BufferSource, each Text.StartsWith([Name], "Mashup") and not Text.Contains([Name], "Container") and not Text.Contains([Name], "Evaluation")),

// Remove all columns except for the Content column

RemoveOtherColumns = Table.SelectColumns(FilterMashupLogsOnly, {"Content"}),

// Add a custom column converting binary content to text lines

AddCustom = Table.AddColumn(RemoveOtherColumns, "Custom", each Table.FromColumns({Lines.FromBinary([Content], null, null, 1252)})),

// Buffer the table after adding the custom column

BufferAddedCustom = Table.Buffer(AddCustom),

// Keep only the newly added custom table

KeepOnlyTables = Table.SelectColumns(BufferAddedCustom, {"Custom"}),

// Expand the custom table

ExpandCustom = Table.ExpandTableColumn(KeepOnlyTables, "Custom", {"Column1"}),

// Parse the JSON in the expanded column

ParseJSON = Table.TransformColumns(ExpandCustom, {"Column1", Json.Document}),

// Remove rows with errors in the parsed JSON column

RemoveErrors = Table.RemoveRowsWithErrors(ParseJSON, {"Column1"}),

// Drill down to the records in the parsed JSON column

DrillDownRecord = RemoveErrors[Column1],

// Get a list of all field names in the JSON records

FieldNames = List.Union(List.Transform(DrillDownRecord, each Record.FieldNames(_))),

// Expand the JSON records to columns

ExpandRecord = Table.ExpandRecordColumn(Table.FromList(DrillDownRecord, Splitter.SplitByNothing()), "Column1", FieldNames, FieldNames),

TableBuffer = Table.Buffer( ExpandRecord, [ BufferMode = BufferMode.Delayed ] ),

// Transform AccessedResources only if it's a list

ResourceValues = Table.TransformColumns(

TableBuffer,

{"AccessedResources", each if _ is list then Text.Combine(List.Transform(_, Text.From), " ; ") else _, type text}

),

// Transform PartitionInputs only if it's a list

PartitionValue = Table.TransformColumns(

ResourceValues,

{"PartitionInputs", each if _ is list then Text.Combine(List.Transform(_, Text.From), " ; ") else _, type text}

),

// Convert all column types to text

AllTypesText =

Table.TransformColumnTypes(

PartitionValue,

List.Transform(

Table.ColumnNames(PartitionValue),

each {_, type text}

)

),

//Add a date time key column

DateTimeKeyColumn =

Table.AddColumn(

AllTypesText ,

"DateTimeKey",

each

let

Key = Text.Combine(List.RemoveNulls(List.Transform(Text.ToList([Start]),each if Value.Is(Value.FromText(_), type number) then _ else null))),

Column = Text.Start( Key, 8) & "." & Text.End( Key, Text.Length(Key)-8 )

in

Column

),

ChangeTypes = Table.TransformColumnTypes(DateTimeKeyColumn,{{"Start", type datetime}, {"entryCount", Int64.Type}, {"totalSize", Int64.Type}, {"hits", Int64.Type}, {"requests", Int64.Type}, {"hitrate", type number} , {"DateTimeKey", type number} })

in

ChangeTypes