Hello everyone

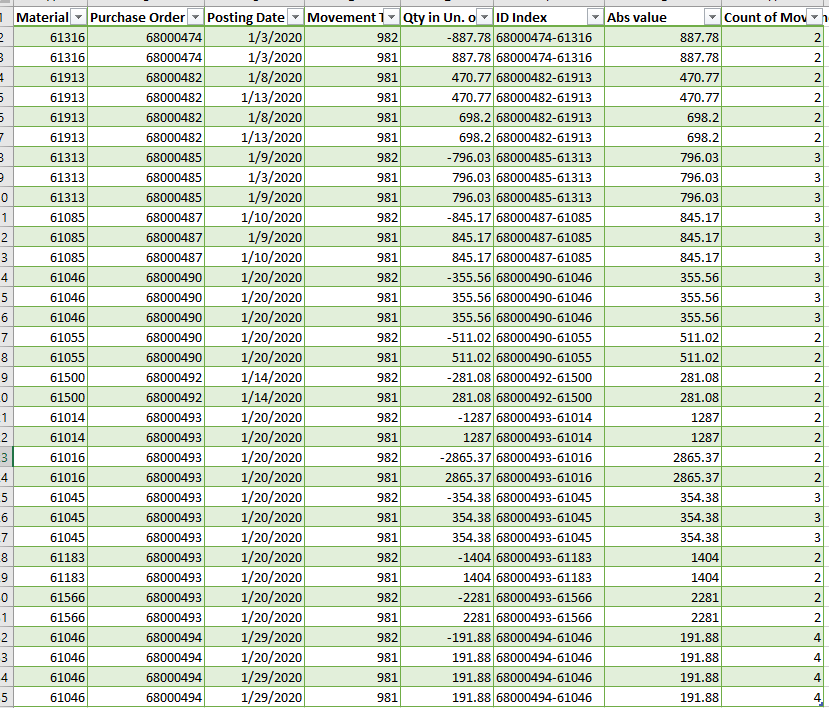

I have the following data which is the inventory movement of some products

We have 2 kinds of movement: 981 and 982’

981 are the “Entries”

982 are the mistakes in the inventory that need to take out

I added a column in my table that count the qty of movement for each product

What i need is:

1 -If the product has Count of Movement=2 and the type of movement are 981 (entries) and 982 (Out of inventory because it was a mistake) , need to eliminate both products from the table. If both movement are 981 I need to keep both products

2 If the product has Count of Movement =3 and the type of movement are differente (981 and 982) I need to eliminate from the Table the earliest 981 and 982 and only keep the latest 981 (which is the latest and correct entry in the inventory)

3 If the product has Count of Movement =4 and the movement are 981 and 982 need to eliminate all, otherwise I need to keep all ( same that with 2 count of movement)

I appreciate any tips about this Eliminate duplicates with some conditions.xlsx (15.6 KB)

Hi @Marta,

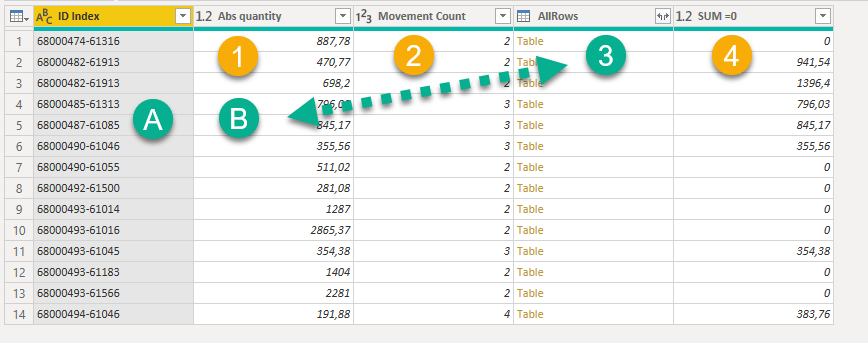

(1) Added an Abs Qty column

(A) + (B) => Grouped by ID Index and Abs Qty AND generated these additional columns

(2) Added a Movement Count (to check against your values)

(3) Added All Rows column

Next added a Custom Column (4) with a List.Sum( [AllRows][Qty in Un. of Entry] )

And finally conditionally transformed the nested table

TransformTable = Table.AddColumn(AddSumIsNull, "NestedTable", each

if [Movement Count] = 2 and [#"SUM =0"] <>0 then [AllRows] else

let

SortedRows = Table.Sort([AllRows],{{"Posting Date", Order.Ascending}}),

Partition = Table.Partition( SortedRows, "Movement Type Inventory ", 2, each _ ),

ToTable = Table.FromList(Partition, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

AddValue = Table.AddColumn(ToTable, "Value", each List.Sum( [Column1][Qty in Un. of Entry] )),

SortedValues = Table.Sort(AddValue,{{"Value", Order.Ascending}}),

CombineTables = Table.Combine( SortedValues[Column1] ),

AddIndex = Table.AddIndexColumn(CombineTables, "Index", 1, 1),

AddRunningTotal = Table.AddColumn(AddIndex, "RunningTotal", each List.Sum( List.FirstN( AddIndex[Qty in Un. of Entry], [Index]))),

FilterRows = Table.SelectRows(AddRunningTotal, each ([RunningTotal] > 0)),

CleanUpColumns = Table.RemoveColumns(FilterRows,{"Index", "RunningTotal"})

in

CleanUpColumns ),

So if the nested table contained 2 rows and the SUM of Qty in Un. of Entry <>0 then we keep the nested table as it is, else with the nested table…

- Sort the Posting Date ascending

- Partition the data on Movement Type Inventory

- Transform the resulting list of tables back into a table

- Sum up the total Qty in Un. of Entry

- Sort that Value Ascending (so the out- precedes the incomming movements)

- Combined the tables

- Added an Index

- Added a RunningTotal

- Filtered rows where the Running Total is >0

- Cleaned up helper columns

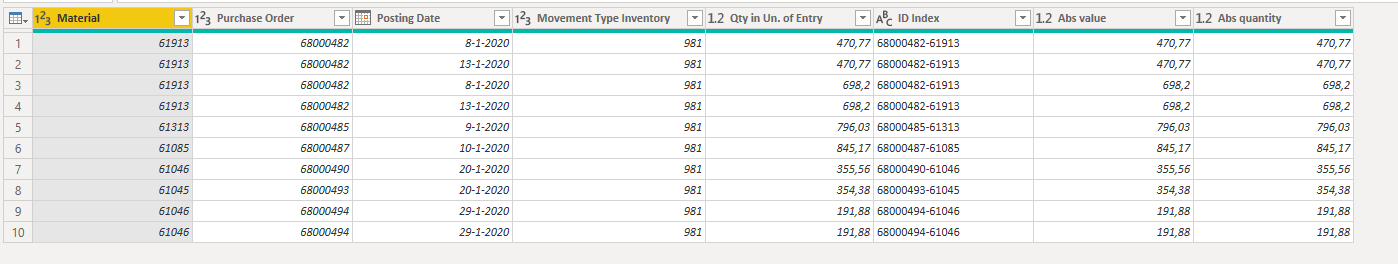

with this result.

Here is my sample file. eDNA - Remove duplicates with Conditions.pbix (23.9 KB)

I hope this is helpful.

Wow, really impressive solution. This would make a very interesting video.

I’m still working through the logic on this (having to go to the MS documentation to understand the Partition step…), but have a technique question- for the nested table transformation, is that all just custom-written code or did you create a dummy of the nested table and work out some of that in the UI?

Thanks!,

- Brian

That’s an excellent question @BrianJ

First paste this in a new blank query, it will help you with the query forensics

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("vZVBroQgEETv4rpJuoGG5iyTuf81viIMaI8D34UbQ6iqlwJUXq8lkKOwwBIEEX3069AZMhYtrsMkdn0akQhROpepsaK8YUyiZh+BErmPJzeQM8hHhBg7k6mpolyASFW6S1KVQhKw3zi7MF1oiuN6Dm9ZdWgxBcDeZWqsKBckVeguKN0EYU4Xz3YyhPqN9AzUnVo0NVeUC5TqdBd07PQfkm8fSdrSVq/OMQP3NlNzRZlCUfM/SGIeLo+JAO0JlXNFmUJR849IjNg8+avzqpSVNSCdzdRcUaZQ1PwjEpJvHvd9p8hK7EympvL8FIaqe0QJwzJWAoNTpJAXtktTMOoCY5bnYTHHHpycWDlXlCkUNf9zJBI3XB559EfQnsrzUxiq7t8UDhMvgd0vqYbZU3l+CkPV/Zty+CVtza2+4taLEaTbZP/5JxXlCqV35y5JXShPkt5/", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Material = _t, #"Purchase Order" = _t, #"Posting Date" = _t, #"Movement Type Inventory " = _t, #"Qty in Un. of Entry" = _t, #"ID Index" = _t, #"Abs value" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Material", Int64.Type}, {"Purchase Order", Int64.Type}, {"Posting Date", type date}, {"Movement Type Inventory ", Int64.Type}, {"Qty in Un. of Entry", type number}, {"ID Index", type text}, {"Abs value", type number}}),

AddAbsQty = Table.AddColumn(#"Changed Type", "Abs quantity", each Number.Abs([Qty in Un. of Entry]), type number),

GroupedRows = Table.Group(AddAbsQty, {"ID Index", "Abs quantity"}, {{"Movement Count", each Table.RowCount(_), Int64.Type}, {"AllRows", each _, type table [Material=nullable number, Purchase Order=nullable number, Posting Date=nullable date, #"Movement Type Inventory "=nullable number, Qty in Un. of Entry=nullable number, ID Index=nullable text, Abs value=nullable number, Abs quantity=number]}}),

#"68000494-61046_191,1" = GroupedRows{[#"ID Index"="68000494-61046",#"Abs quantity"=191.88]}[AllRows],

SortedRows = Table.Sort(#"68000494-61046_191,1",{{"Posting Date", Order.Ascending}}),

Partition = Table.Partition( SortedRows, "Movement Type Inventory ", 2, each _ ),

ToTable = Table.FromList(Partition, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

AddValue = Table.AddColumn(ToTable, "Value", each List.Sum( [Column1][Qty in Un. of Entry] )),

SortedValues = Table.Sort(AddValue,{{"Value", Order.Ascending}}),

CombineTables = Table.Combine( SortedValues[Column1] ),

AddIndex = Table.AddIndexColumn(CombineTables, "Index", 1, 1),

AddRunningTotal = Table.AddColumn(AddIndex, "RunningTotal", each List.Sum( List.FirstN( AddIndex[Qty in Un. of Entry], [Index]))),

FilterRows = Table.SelectRows(AddRunningTotal, each ([RunningTotal] > 0)),

CleanUpColumns = Table.RemoveColumns(FilterRows,{"Index", "RunningTotal"})

in

CleanUpColumns

.

When I first discovered the awesome power of nested tables I would add a new Custom Column to my query for each separate transformation, as I continue to get more familiar with M I find myself combining several transformations in a single Custom Column (same progression as moving from the use of helper columns to nesting functions in excel).

But when I need to transform a nested table within a nested table (like here) OR the transformations are many/complex I use that hack of “Add as new query” for the most challenging nested table - in this case the table with 4 rows. When I’ve finished with that all I have to do is incorporate the generated M code back into the base query, so the second let statement is kinda a give away…

Come to think of it I took the scenic route…

TransformTable = Table.AddColumn(AddSumIsNull, "NestedTable", each

if [Movement Count] = 2 and [#"SUM =0"] <>0 then [AllRows] else

let

SortedRows = Table.Sort([AllRows],{{"Movement Type Inventory ", Order.Descending}, {"Posting Date", Order.Ascending}}),

AddIndex = Table.AddIndexColumn(SortedRows, "Index", 1, 1),

AddRunningTotal = Table.AddColumn(AddIndex, "RunningTotal", each List.Sum( List.FirstN( AddIndex[Qty in Un. of Entry], [Index]))),

FilterRows = Table.SelectRows(AddRunningTotal, each ([RunningTotal] > 0)),

CleanUpColumns = Table.RemoveColumns(FilterRows,{"Index", "RunningTotal"})

in

CleanUpColumns ),

This will get you just the same result. I guess that’s what you get when your brain is fried LOL

Here’s my updated file. eDNA - Remove duplicates with Conditions.pbix (25.5 KB)

I hope this is helpful.

I use that hack of “Add as new query” for the most challenging nested table - in this case the table with 4 rows. When I’ve finished with that all I have to do is incorporate the generated M code back into the base query,

That’s fantastic. I’ve never used that command before, but I can see how useful that would be in building up more complex M.

The code above is really helpful - I now understand table.partition as the reverse of table.combine. Just one more question. In table.partition, for the final hash function parameter, do you ever use anything other than “each _”? (that’s the example in the MS doc as well, and I can’t think of what other value that could possibly take).

Thanks!

- Brian

Okay so let’s examine this…

To the best of my knowledge, you need to invoke whatever you are doing for each _ (record).

So something like this works as well - let me know if this is helpful.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("Hck7CoAwDADQu2Tu4l9H/3qG0iHagoXqEEXx9saMj6c11GFxdIGCCIzS0PgQXlYsajfyJysRdXg7Riro7YNkmZlw8MfByAWjIwz/FcLJ0Y7/lsL5RFxZFRjzAQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Name = _t, Number = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Name", type text}, {"Number", Int64.Type}}),

myFunction = (myNum as number) => Number.RoundUp( myNum /3, 0),

Partition = Table.Partition( #"Changed Type", "Number", 3, each myFunction(_))

in

PartitionThank you very much Melissa

It works!!

Waiting for your video about it

Okay, noted on my to do list…

Make sure you’re subscribed to the eDNA YouTube Channel