Hi everyone,

I’m seeking best practices regarding dataset and dataflow maintenance in power BI. I’m a finance user acting as BI architect.

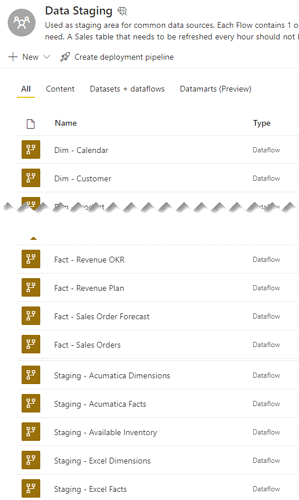

Currently I have a data staging workspace where I first stage any data for import to power bi, then break it out into fact and dimension flows. the number of flows is getting large, is there a better way or is this ok?

I have a single large dataset in another workspace with all the fact tables. Reports are built off this dataset. This dataset is becoming a pain to maintain, so I’m looking for a better way.

I could build each report’s dataset with only the flows it needs, but that would duplicate DAX calculated columns I need for analysis.

Another option is to make one dataset per fact table and build reports off these. additional datasets would be needed for multiple fact table analysis (Sales vs budget, Revenue estimate, Revenue + Open Orders + Forecasted Orders, inventory projection)

How does a BI team manage all of this?

dataflows below:

Hi Don,

I have a similar question. When setting up dataflow staging, do you need premium capacity or can you do it in pro license?

Thanks

You can set up basic flows in pro. certain features, like DirectQuery to a dataflow, computed entities, linked entities (referencing a flow from within another flow), are features of premium per user or premium capacity.

I have premium per user.

So if my company doesn’t want to get premium capacity for some reason, I am checking into it now, how can I mirror linked dataflows.

I am trying to create staging and transformation dataflows. Can the below solution work from RADACAD?

Thanks Carlos.

Reference from Another Query in Dataflow - No Premium Needed - Power Platform Dataflows - RADACAD

Hello @don_diaz ,

Did the response above help solve your query?

If not, can you let us know where you’re stuck and what additional assistance you need? If it did, please mark the solution that helped you.

Thank you

Update to this thread:

My practice for dataflows is good.

Having a single golden dataset with all fact tables is bad practice and was the cause of the pain points mentioned in the op.

Background:

I decided to keep dataflows as they are and be more liberal with report creation. Every report is a new pbix. I’ve moved away from thin reports for the time being.

I use power automate to refresh each dataset. The trigger is the upstream dataflow refresh. Only one automation is needed, the primary fact table in the report, but more than one automation is possible.

I created a golden dataset for each of the fact tables. The option is there if I want to make a quick ad-hoc thin report. But my main use case for the golden datasets is Analyze in excel.

My old golden dataset had grown to contain over 100 measures and 6 or 7 fact tables, with additional tables needed for the odd special-case visual.

Navigating these fields and measures was cumbersome in excel pivot table.

In thin reports based off this dataset, I lost track of which measures were from the golden dataset and which were local to the thin report.

Hi @don_diaz ,

Given the current status of this inquiry, can we close this thread?

If yes, please mark your response above as the Solution.

If you have any concern related to this topic, you can create a new thread.