I have a record of employee allocations. The organization allocates employees to various clients and their departments to work on their tasks.

Refer to the attached sample records: basedata-r.xlsx (4.7 MB).

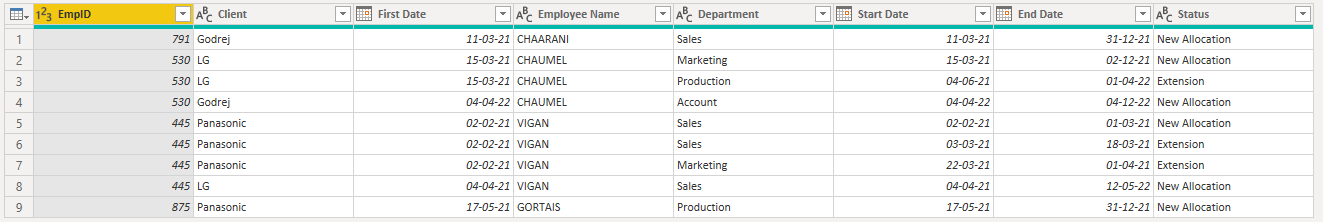

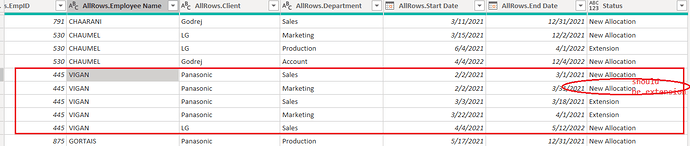

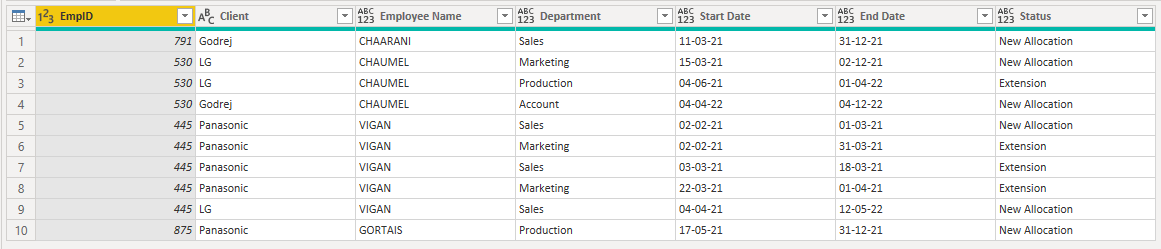

E.g. CHARANI is allocated to Godrej from 11 March to 31 December. Since there are no previous allocation records, this is a fresh allocation to Godrej and is considered a “New Allocation”.

E.g. Chaumel is allocated to LG on 15-Mar-21 (New Allocation). On 4-Jun-21 she is allocated to another department within the same client, so that second record is an “Extension”.

E.g. VIGAN is first allocated to Panasonic on 2-Feb-21 (New Allocation). On 3 March and 22 March he is moved to other departments, but since they are within the same client, those records are “Extensions”. Then on 4-Apr-21 he is allocated to LG, so that record is a “New Allocation”.
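The classification in these examples can be sketched in Python as follows. This is a minimal sketch under a few assumptions: each record is reduced to (employee, client, start_date); departments are left out because only a change of client matters in the examples; and CHARANI's year, which is not stated, is assumed to be 2021. The rule used here ("New Allocation" when the client differs from the employee's previous record, otherwise "Extension") is one reading of the examples, not a confirmed specification, and `label_by_client_change` is a hypothetical name.

```python
from datetime import date
from itertools import groupby

# Example records from the post: (employee, client, start_date).
# Departments are omitted (they do not affect the label).
# Assumption: CHARANI's start year is not given in the post; 2021 is assumed.
RECORDS = [
    ("CHARANI", "Godrej", date(2021, 3, 11)),
    ("Chaumel", "LG", date(2021, 3, 15)),
    ("Chaumel", "LG", date(2021, 6, 4)),
    ("VIGAN", "Panasonic", date(2021, 2, 2)),
    ("VIGAN", "Panasonic", date(2021, 3, 3)),
    ("VIGAN", "Panasonic", date(2021, 3, 22)),
    ("VIGAN", "LG", date(2021, 4, 4)),
]


def label_by_client_change(records):
    """Label a record 'New Allocation' if it is the employee's first record
    or its client differs from the employee's previous record; otherwise
    label it 'Extension'."""
    labelled = []
    # Sort by employee, then start date, so each employee's history is in order.
    ordered = sorted(records, key=lambda r: (r[0], r[2]))
    for _, history in groupby(ordered, key=lambda r: r[0]):
        previous_client = None  # no earlier record for this employee yet
        for employee, client, start in history:
            label = "New Allocation" if client != previous_client else "Extension"
            labelled.append((employee, client, start, label))
            previous_client = client
    return labelled


for employee, client, start, label in label_by_client_change(RECORDS):
    print(f"{employee:<8} {client:<10} {start:%d-%b-%y}  {label}")
```

On these records the sketch reproduces the labels described above: one New Allocation for CHARANI, a New Allocation plus an Extension for Chaumel, and for VIGAN a New Allocation at Panasonic, two Extensions, then a New Allocation at LG.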

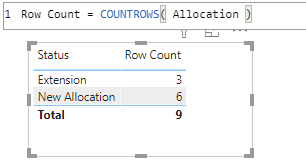

Problem Statement

Count “New Allocations” & “Extensions”

Notes

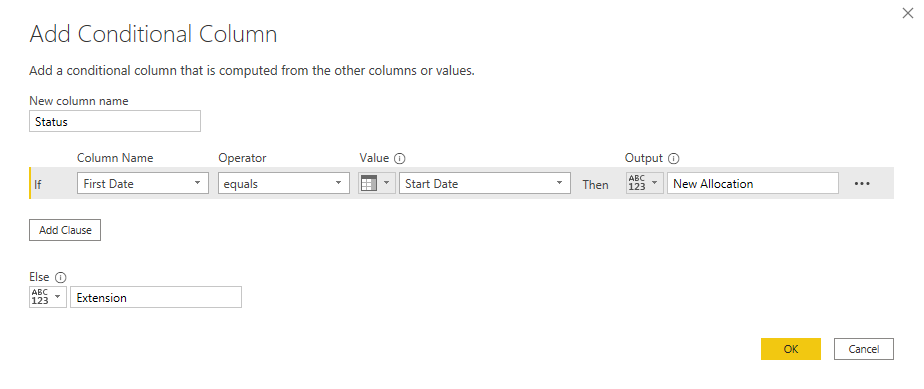

- If a person has only a single record in this sheet, it is a “New Allocation”.

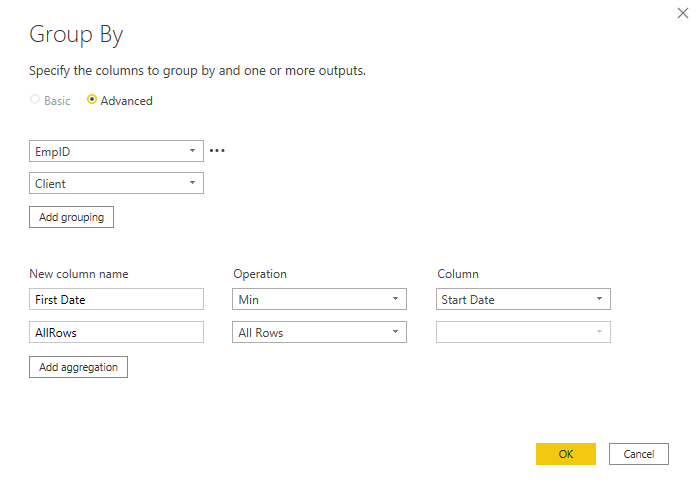

- If there are multiple records for the same person and the client name is the same, the entry with the MIN(startdate) is considered the “New Allocation” and the rest are “Extensions”.

- If there are multiple records for the same person across different clients, then each time the employee is allocated to a new client, that client's entry with the MIN(startdate) is considered the “New Allocation” and the rest are “Extensions”.
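The rules in these notes can be sketched as a group-by on (employee, client): within each group, the record with the MIN(startdate) is the “New Allocation” and every other record is an “Extension”, and the two labels are then counted. This is a minimal, standard-library-only sketch. The function name `label_by_first_start_per_client` is hypothetical, the `RECORDS` list stands in for rows of basedata-r.xlsx (whose column layout is not given in the text), CHARANI's year is assumed to be 2021, and if two records tie on the earliest start date only the first one seen is assumed to be the “New Allocation”.

```python
from collections import Counter
from datetime import date

# (employee, client, start_date); stand-in for rows loaded from the spreadsheet.
# Assumption: CHARANI's start year is not given in the post; 2021 is assumed.
RECORDS = [
    ("CHARANI", "Godrej", date(2021, 3, 11)),
    ("Chaumel", "LG", date(2021, 3, 15)),
    ("Chaumel", "LG", date(2021, 6, 4)),
    ("VIGAN", "Panasonic", date(2021, 2, 2)),
    ("VIGAN", "Panasonic", date(2021, 3, 3)),
    ("VIGAN", "Panasonic", date(2021, 3, 22)),
    ("VIGAN", "LG", date(2021, 4, 4)),
]


def label_by_first_start_per_client(records):
    """Label the earliest-starting record of each (employee, client) pair
    'New Allocation' and all other records 'Extension'."""
    # Pass 1: MIN(startdate) per (employee, client).
    first_start = {}
    for employee, client, start in records:
        key = (employee, client)
        if key not in first_start or start < first_start[key]:
            first_start[key] = start

    # Pass 2: label records in their original order.
    labelled = []
    seen_new = set()  # assumption: on a tie, only the first match is "New Allocation"
    for employee, client, start in records:
        key = (employee, client)
        if start == first_start[key] and key not in seen_new:
            seen_new.add(key)
            label = "New Allocation"
        else:
            label = "Extension"
        labelled.append((employee, client, start, label))
    return labelled


counts = Counter(label for *_, label in label_by_first_start_per_client(RECORDS))
print(counts["New Allocation"], counts["Extension"])  # → 4 3
```

A single record for a person is its own group, so it always comes out as a “New Allocation”, matching the first note. This per-client reading differs from a “client changed since the previous record” reading only when an employee returns to a client they worked for earlier: here the return is labelled an “Extension”. The notes do not say which behaviour is intended for that case.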