Hi,

I need some help with the following challenge.

What do I want?

I need a report with the number of customers with active products for each day.

For example:

| Date | A | A,B | B | C | Total |
|---|---|---|---|---|---|
| 09-12-2019 | 1 | 2 | 1 | 2 | 6 |
| 08-12-2019 | 1 | 1 | 1 | 2 | 5 |
| Etc. | | | | | |

So on the 9th of December there is 1 customer whose only active product is A, there are 2 customers who have both product A and product B active (ActiveProductPath = A, B), there is 1 customer whose only active product is B, and there are 2 customers whose only active product is C. That makes 6 customers in total.
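To make the expected output concrete, here is a minimal sketch of the counting logic in Python (not DAX, and not the technique used in the dummy file). The row layout (customer, product, start date, end date), the sample values, and the name `path_counts` are assumptions made up for illustration; they are not taken from the .pbix file.

```python
from collections import Counter
from datetime import date

# Hypothetical sample rows: (customer, product, start_date, end_date).
# These values are invented for the sketch and do not come from the dummy file.
ROWS = [
    ("c1", "A", date(2019, 12, 1), date(2019, 12, 31)),
    ("c2", "A", date(2019, 12, 1), date(2019, 12, 31)),
    ("c2", "B", date(2019, 12, 9), date(2019, 12, 31)),
    ("c3", "B", date(2019, 12, 1), date(2019, 12, 8)),
    ("c3", "C", date(2019, 12, 9), date(2019, 12, 31)),
]


def path_counts(rows, day):
    """Count customers per active product path on a single day."""
    active = {}
    for customer, product, start, end in rows:
        # A product counts as active when the day falls inside its date range.
        if start <= day <= end:
            active.setdefault(customer, set()).add(product)
    # A customer's path is the sorted, comma-joined set of active products.
    return Counter(", ".join(sorted(products)) for products in active.values())


counts = path_counts(ROWS, date(2019, 12, 9))
total = sum(counts.values())  # the "Total" column for that day
```

The paths are computed for one day at a time, so nothing like a full day-customer-productpath table is kept in memory; only the per-day counts are retained.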

Dummy file and a solution

You can find a dummy file with a solution here: ActiveProductPathFinalv2.pbix (118.8 KB)

You already have a solution, so why this question?

The technique used in that solution doesn’t work for large datasets because it needs too much memory.

Can you explain this?

The dummy file contains 14 products (= rows). With the technique used (a “day-customer-productpath” table), this becomes 922 rows (= 65.86 times 14).

I have another file with 7,769 different products, and with the technique used in the dummy file the “day-customer-productpath” table grew to 4,965,732 rows (about 639 times as many)! This wasn’t a problem for Power BI.

But now I have a file with 1.7 million different products, and with this large dataset my computer runs out of memory.

So what’s your question?

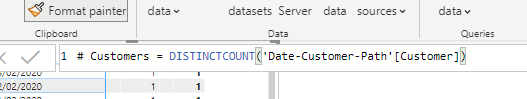

Is it possible to solve this challenge without the technique used in the dummy file? In other words, is there a DAX solution that creates virtual tables and counts the customers for each distinct “ActiveProductPath”?

Hope to hear from you soon.

Thanks in advance!

Regards from the Netherlands,

Cor