Pardon the length of this post. I took a somewhat unusual approach to the data modeling component of this challenge, and have gotten a number of questions about it, and the same is true for the timeline visual I included in my report. Thus, for those interested I have laid out the approach I used for each in detail, with a lot of screenshots to help explain the methodologies.

DATA MODEL

In looking at the data initially, all the tables had a many-to-many relationship to each other. However, I knew from previous financial projects I’ve worked on that if I could build a single transactions fact table that captured ordered, received and billed end-to-end with granularity at both the purchase order and materials levels, that would be an extremely powerful data modeling outcome that I could then use to create a pivoted version that would allow further powerful analysis. So, the objective became to create three tables, each using a concatenation of PO and material as the key to uniquely define records within each table. So, to see how feasible this might be, I started with the Purchased table:

REDUCING THE PURCHASED TABLE:

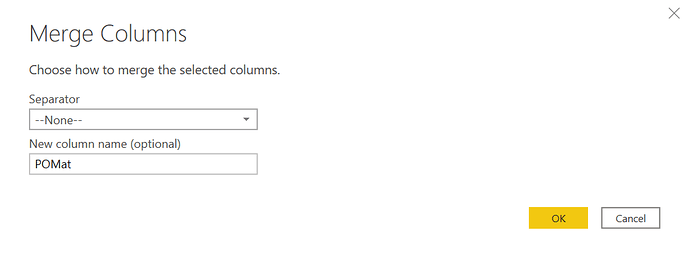

The first thing I did was create a merged key field based on PO and material:

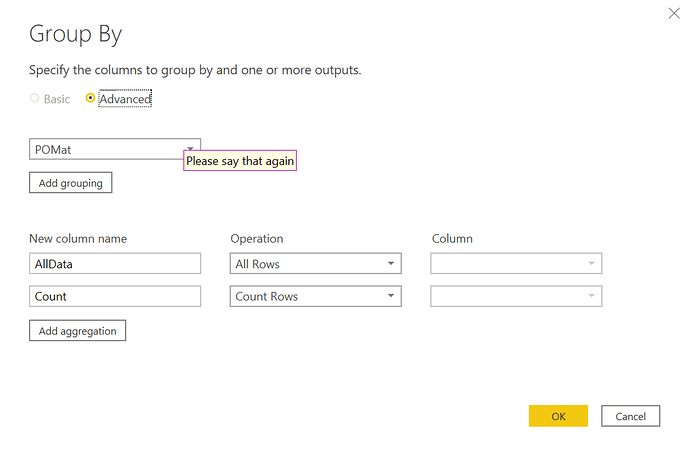

then I grouped based on this field and added a counter field to see how many records had more than one instance of the (hopefully) unique key:

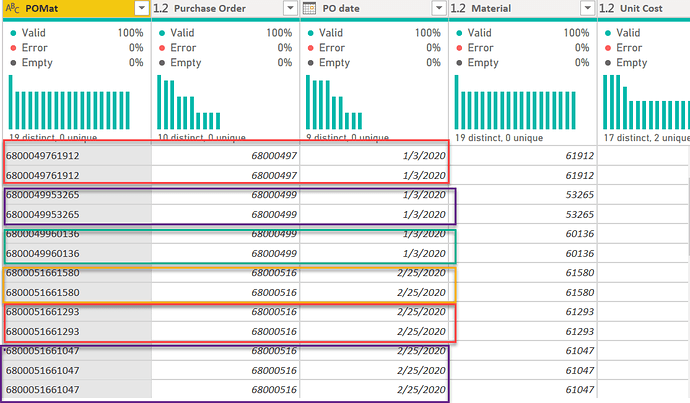

After expanding on all rows, the answer was 39 records with a count of two or more. However, in looking carefully at these records it became clear that each multiple instance still had the same PO date,

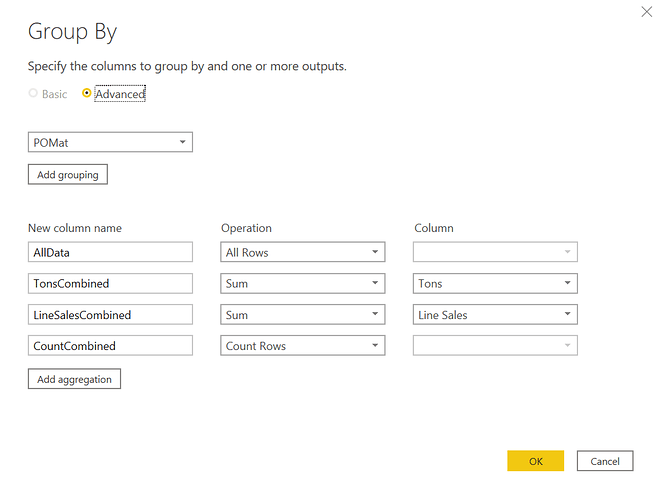

which meant that we could aggregate the amounts by our key field and recompute the average price based on the combined line and not lose any relevant information.

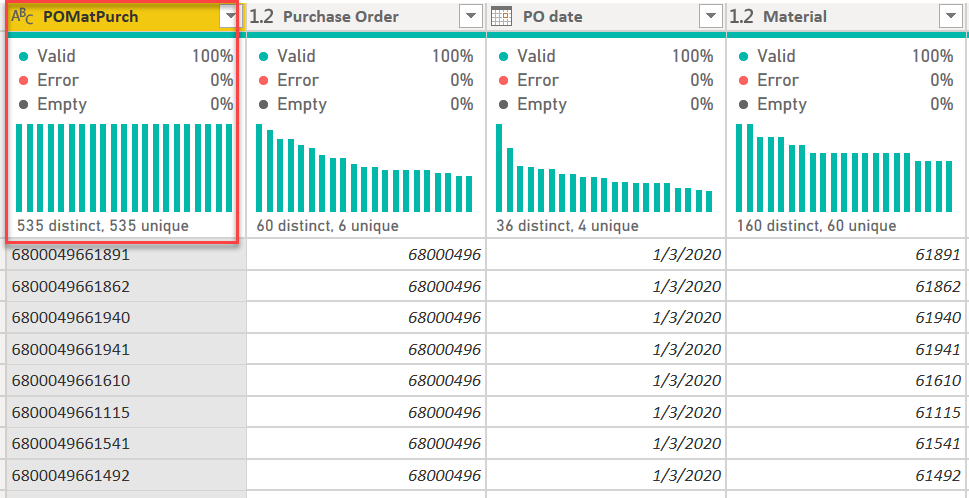

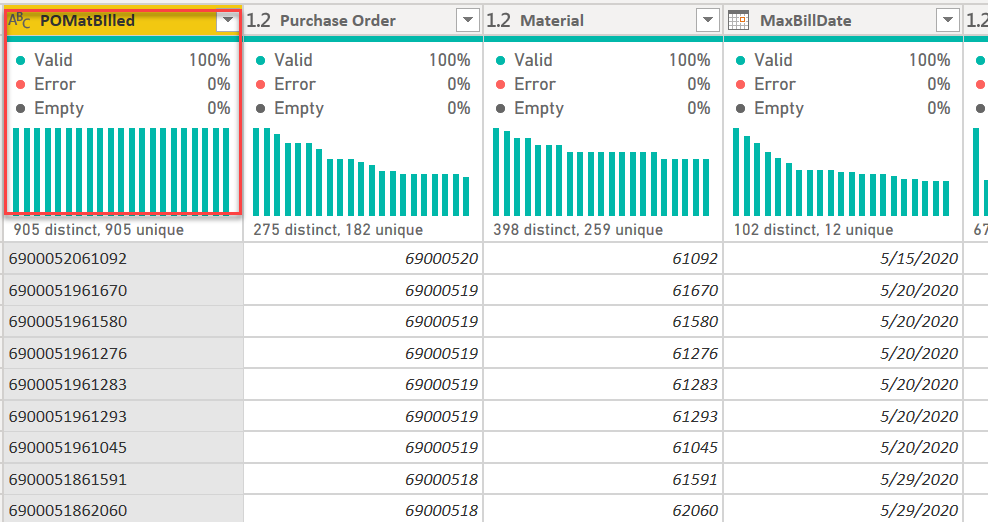

with the end result being a table of 535 records uniquely identified by our concatenated PO-materials key:

So, one down, two to go….

REDUCING THE RECEIVED TABLE:

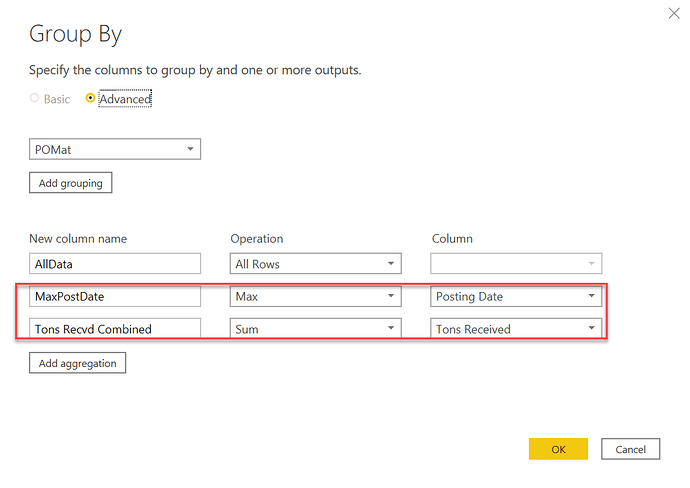

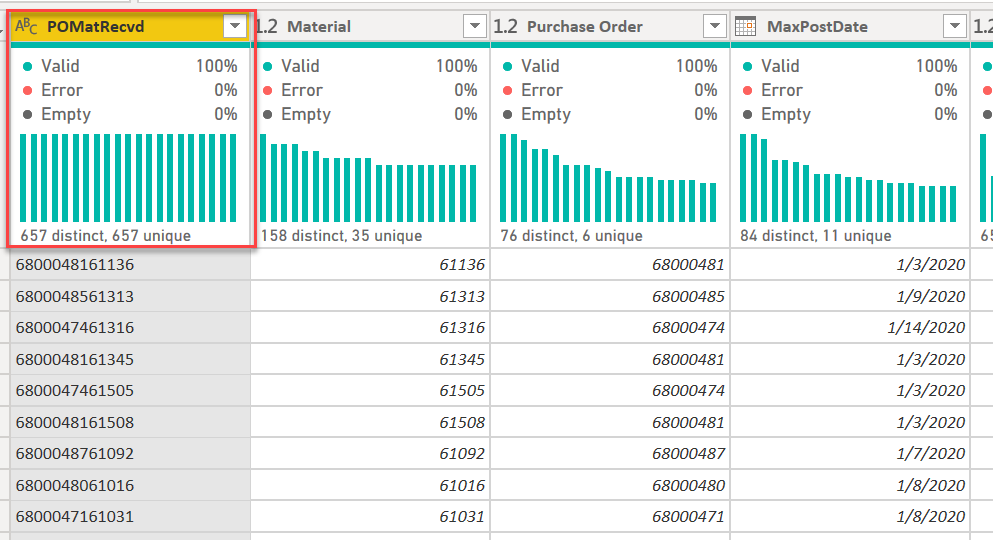

So, I ran the same analysis initially on the Received table, but this time the data were not quite as well-behaved. In this case some of the key field combinations had different received dates, and thus we couldn’t collapse the sums into a single existing date. It was at this point that I made an assumption about business rules that allowed for the creation of a one record per key table.

A word about assumptions. I feel that economists get unfairly bagged on about making assumptions (evidence that “assume a can opener” has its own Wikipedia entry). I would say two things about this: 1) making these assumptions is useful because it lets you do the thought experiment of what the minimum assumption needed to achieve the desired result is, and then evaluate whether that assumption is reasonable and what the implications of making it are; and 2) if the analytical power of the analysis is dramatically increased by making the assumption, in some cases you may actually be able to convince decision-makers to change the business process itself in a way that makes that assumption a fact. In this case, I think the assumption that the “received” date for a set of materials within a given purchase order is the maximum date on which the last material ordered was received is eminently reasonable. When you order from Amazon, and they split your purchase across multiple days of delivery, the order isn’t considered fulfilled until the last item is delivered. By making this assumption, we can now reduce the Received table down to one in which the concatenated key uniquely defines a record:

Note: in a real-world situation, I would certainly talk to the client before making this type of assumption about their business rule. In this case, where that wasn’t possible I just “assumed an agreeable client”. ![]()

two down, one to go…

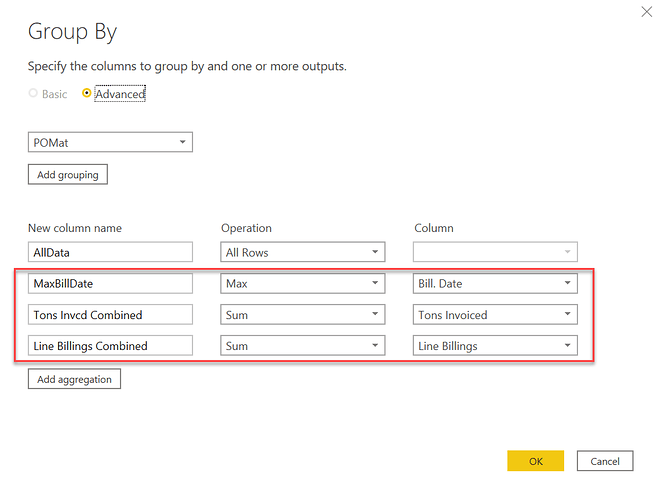

REDUCING THE BILLING TABLE

the exact same strategy used to reduce the Received table was applied to the Billing table. The business rule assumption that materials weren’t considered “billed” until the max date was made here as well.

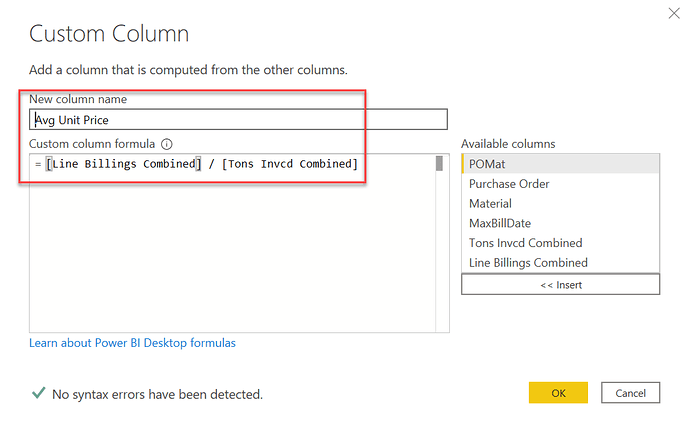

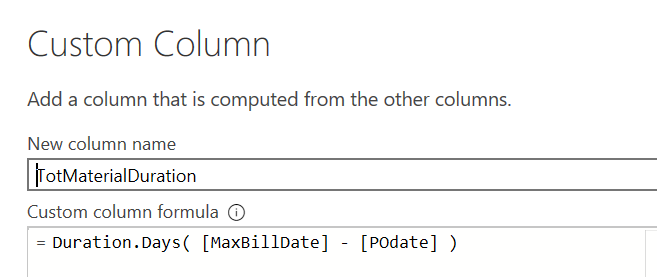

Once the aggregations and Max bill date were computed, I added a new custom column to recalculate average unit price based on the sum totals.

And we are again left with a table in which the composite key uniquely defines a record

The final step, now that we had three tables for which the composite key (concatenated PO and material) uniquely defined a record was to merge these three tables into a single Transactions table

the two merges to create the transactions table were simple left outer joins, merged on the composite key created in each of the three table reductions described above

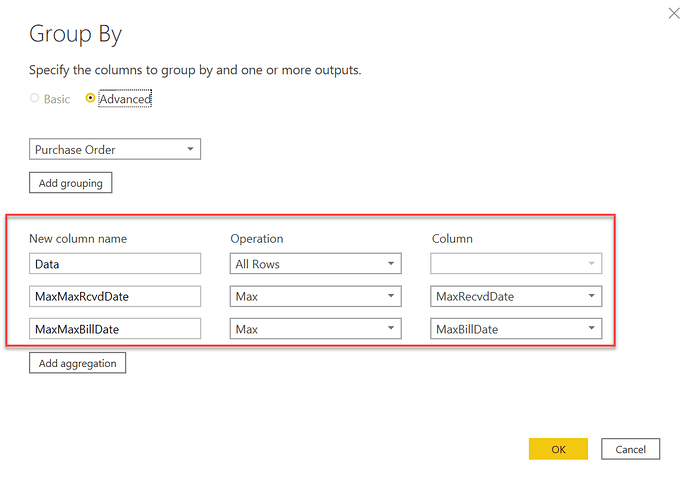

Now we created two more max fields – this time at the PO level, rather than the material level. This represents the second-order condition assumption that a purchase order is considered “received” when the last material ordered is received, and the same assumption is made about billing. Note: for those examining my methodology, the convention is that Max refers to maximums at the material level, and MaxMax refers to maximums at the PO level.

Once this master transactions table was created, it became a trivial matter to compute the days between different pairs of dates.

Now once the Transactions table was created, I duplicated it and created an unpivoted version that was necessary for the timeline visual.

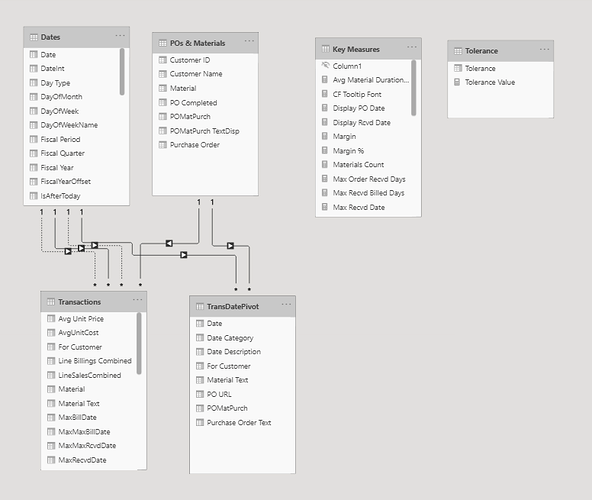

Here’s how it all looks in the final data model:

Ultimately, a pretty simple but very powerful, flexible data model, but a lot of prep work needed to get to this point. Doing so was totally worth it though IMO because it dramatically simplified the rest of the analysis and the DAX I had to write (and the DAX I didn’t have to write…).

With the data model completed, most of the rest of the report was pretty standard stuff. The two elements worth some additional explanation are the timeline visual and the tolerance/custom icon interaction.

TIMELINE VISUAL

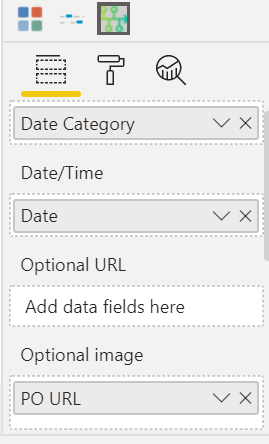

As I mentioned when I posted my entry initially, the timeline was a custom visual called Queryon Timeline. You can really create some amazing visual timelines with this, but it is almost completely undocumented – no useful documentation on the company website, no videos on YouTube and no posts on the Microsoft Community. Because of how customizable the timelines are, there are a ton of configuration options and thus it took a lot of trial and error to figure out how to use this visual.

Here’s the choice of fields that ultimately produced the timeline I wanted. Date Category is from my unpivoted transactions table, and just captures whether the date is ordered, received or billed. Date is the single date column from my unpivoted transactions table (NOT the date from my date table, which was an initial mistake I made) and PO URL, which was a column I created that contained the image URL corresponding to the custom icon for each unique entry in the Date Category column (see section below for discussion of web hosting of custom icons).

After this initial set up, it was just a matter of playing with all the formatting and configuration options available in the custom visual.

I think I will try to do a video demonstrating the options and configurations available via this visual, since nothing like that currently exists elsewhere and this visual fills a role that I’ve never seen done as well by any other visual.

TOLERANCE % AND CUSTOM ICON HOSTING

Seeing that for most POs the total ordered quantity, received quantity and billed quantity differed by varying degrees, I created a “Tolerance %” what-if parameter to indicate within that percentage is close enough to call the compared quantities (ordered vs received, received vs billed) equal. If the two quantities are equal within the set tolerance, the icon returns an on target arrow, whereas if the second item is less than the first outside of the tolerance it returns the down arrow, and an up arrow if the reverse outside the tolerance.

In Challenge #5, I stumbled upon the best way I’ve found so far to manage custom icons. Here’s the process I used:

-

find the icons you want on flaticon.com (great site with millions of searchable icons in multiple styles)

-

using the flaticon editor, alter the colors of the chosen icons to match your color theme. A big help in managing hex codes is a free program I found called Just Color Picker. I have a video coming out this week on the Enterprise DNA TV YouTube channel on different ways to use Just Color Picker to save you a lot of time in the development and application of color themes.

-

Download the customize icons from flaticon.com and then upload them to imgbb.com – an excellent free image hosting website. Imgbb provides the direct URLs for each icon file.

-

You can then write your measure or calculated column to return these URL values as the result of IF or SWITCH statements, such as this:

PO URL =

SWITCH ( TransDatePivot[Date Category], "Ordered", "https://i.ibb.co/QMfQ3tv/order-orange.png", "Max Received", "https://i.ibb.co/FbpjhRX/received-medium-blue.png", "Received", "https://i.ibb.co/FbpjhRX/received-medium-blue.png", "Max Billed", "https://i.ibb.co/5G8NYyv/invoice-dark-blue.png", "Billed", "https://i.ibb.co/5G8NYyv/invoice-dark-blue.png", BLANK () )

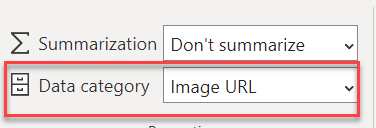

The only thing you need to be sure to do after that is to define the data category for your measure or column as Image URL

Other than that, everything else in the report is pretty standard stuff. Because of all the advance Power Query prep, I ended up writing significantly less DAX than usual and the measures I did write by and large were pretty simple.

I hope you found this helpful. Thanks for sticking with it to the end.

• Brian