Great question. I’d love to hear the techniques that others on the forum use, but here are a few ideas to start the discussion:

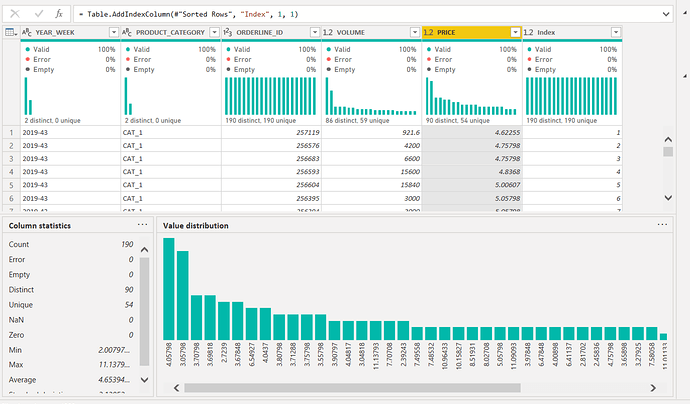

- Turn on Column distribution, Column quality and Column profile in Power Query (View tab). They provide great information on empty values, errors, potential outliers, etc.

- Running the DAX VALUES or DISTINCT functions on a column can help identify inconsistent data entry problems, such as different spellings of what should be the same value.

- There are lots of techniques for identifying outliers. Some outliers may be legitimate values, while others may be data entry errors. See this thread for more:

  https://forum.enterprisedna.co/t/outlier-detection/3412

- If you use R, people have written a lot of scripts to detect different types of dirty data. With R installed, you can run these scripts as steps in Power Query (Transform > Run R script).
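For anyone who wants to see what these checks are doing under the hood, here is a rough sketch in Python (not Power Query, DAX or R). The function names are hypothetical, "empty" is assumed to mean `None` or a whitespace-only string, and the outlier check uses Tukey's 1.5 × IQR fences, which is just one common technique and not necessarily the one discussed in the linked thread.

```python
from collections import Counter, defaultdict
from statistics import quantiles


def is_empty(value):
    # Assumption: None and whitespace-only strings count as "empty".
    return value is None or (isinstance(value, str) and not value.strip())


def profile_column(values):
    """Rough analogue of Column quality/distribution: empty, distinct and unique counts."""
    non_empty = [v for v in values if not is_empty(v)]
    counts = Counter(non_empty)
    return {
        "count": len(values),
        "empty": len(values) - len(non_empty),
        "distinct": len(counts),  # number of different non-empty values
        "unique": sum(1 for c in counts.values() if c == 1),  # values seen exactly once
    }


def inconsistent_variants(values):
    """Group spellings that become identical after collapsing whitespace and casefolding."""
    groups = defaultdict(set)
    for v in values:
        if isinstance(v, str) and v.strip():
            key = " ".join(v.split()).casefold()
            groups[key].add(v)
    # Keep only the keys that were entered in more than one way.
    return {k: sorted(s) for k, s in groups.items() if len(s) > 1}


def iqr_outliers(numbers, k=1.5):
    """Flag values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(numbers, n=4)  # default 'exclusive' quartile method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in numbers if x < low or x > high]


if __name__ == "__main__":
    city = ["Denver", "denver ", "Boston", None, "  ", "Boston", "BOSTON", "Austin"]
    print(profile_column(city))
    # {'count': 8, 'empty': 2, 'distinct': 5, 'unique': 4}
    print(inconsistent_variants(city))
    # {'denver': ['Denver', 'denver '], 'boston': ['BOSTON', 'Boston']}
    print(iqr_outliers([12, 14, 13, 15, 14, 13, 250]))
    # [250]
```

The grouping step goes a bit further than a plain list of distinct values: by collapsing case and extra spaces it puts near-duplicate entries side by side, which is usually where the data entry problems hide. Flagged outliers still need a human look, since some of them will be legitimate.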

Eager to hear what techniques and tools others are using.

-Brian